Data Producer Application Generates Events

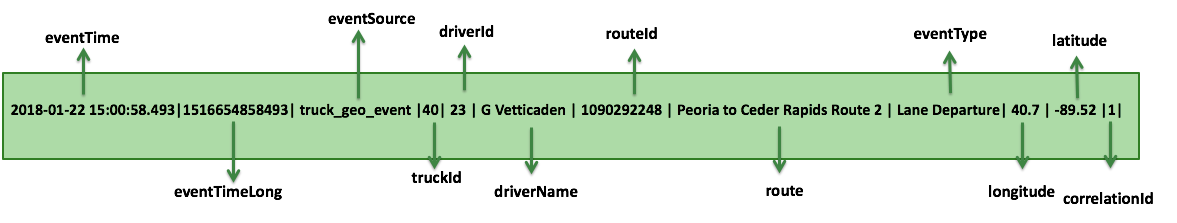

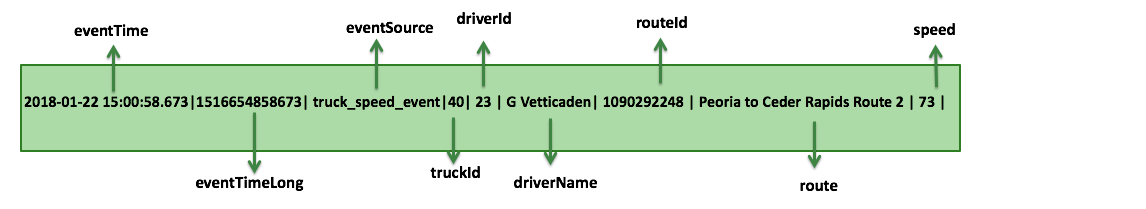

The following is a sample of a raw truck event stream generated by the sensors.

Geo Sensor Stream

Speed Sensor Stream

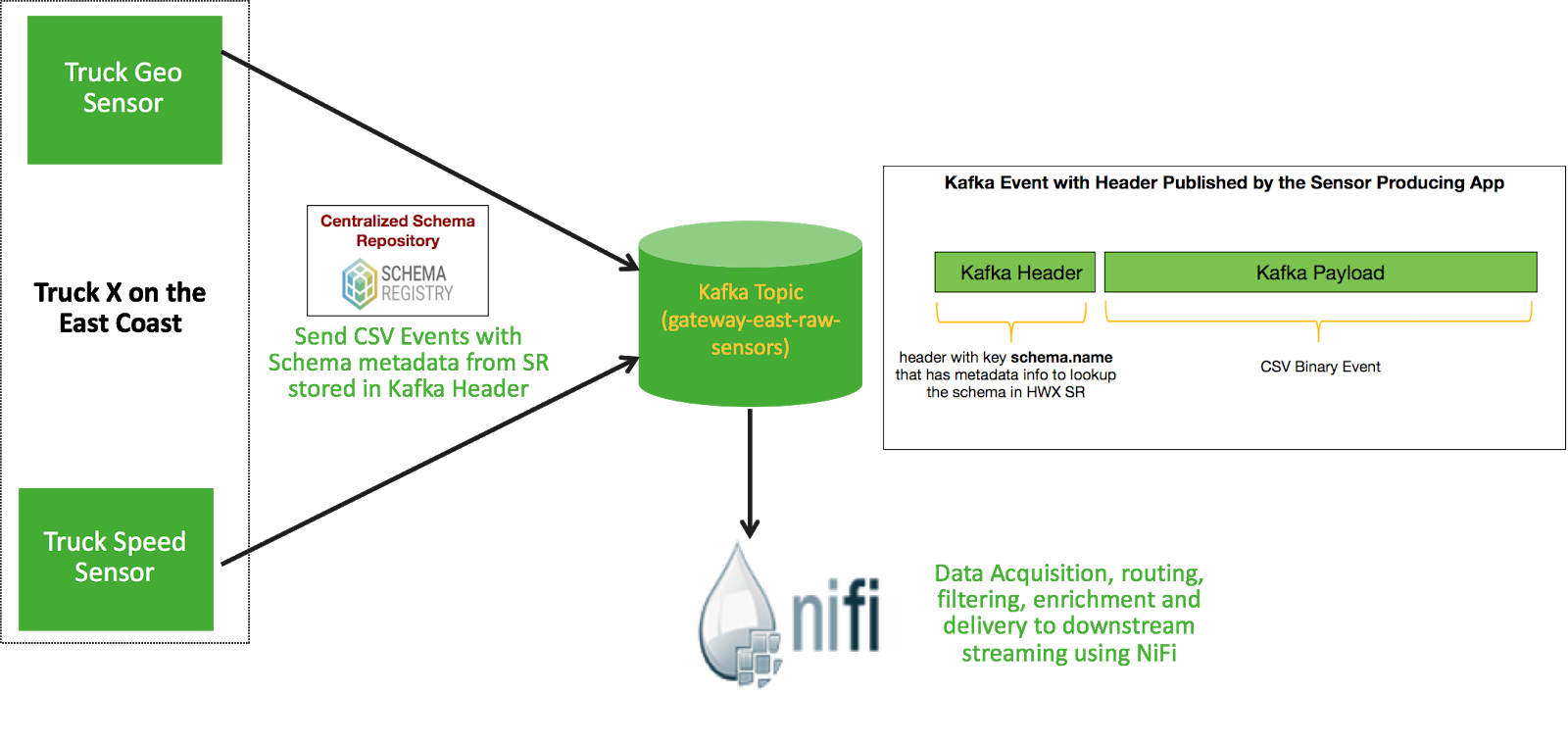

The data-producing application (the data simulator) publishes CSV events and puts the schema name in the Kafka event header, using the header feature available as of Kafka 1.0. The accompanying diagram illustrates this.
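To make the event layout concrete, here is a minimal sketch of how a simulator might assemble one such event. It assumes the header key is `schema.name`; the schema name `truck_speed_events`, the topic name, and the field values are hypothetical, since the actual schema is not given here. The block only builds the payload and headers. The commented-out line shows how they could be passed to `KafkaProducer.send` from the kafka-python client, which accepts headers as a list of `(str, bytes)` tuples.

```python
import csv
import io

# Assumed header key; the real simulator may use a different one.
SCHEMA_HEADER_KEY = "schema.name"


def build_event(schema_name, fields):
    """Return (value, headers) for one CSV event tagged with its schema name."""
    buf = io.StringIO()
    # Write a single CSV line without a trailing newline.
    csv.writer(buf, lineterminator="").writerow(fields)
    value = buf.getvalue().encode("utf-8")
    # Kafka headers are key/value pairs; the value is raw bytes.
    headers = [(SCHEMA_HEADER_KEY, schema_name.encode("utf-8"))]
    return value, headers


# Hypothetical speed event: timestamp, event source, truck id, speed.
value, headers = build_event(
    "truck_speed_events", ["2018-01-01 10:00:01", "speed", 42, 65]
)

# With kafka-python installed and a broker running (topic name is hypothetical):
# producer.send("raw-truck-events", value=value, headers=headers)
```

Keeping the schema name in the header rather than in the payload means a consumer can pick the right schema before it parses a single byte of the CSV body.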

Use NiFi's Kafka 1.X ConsumeKafkaRecord and PublishKafkaRecord processors, which support record-based processing, to do the following:

- Grab the schema name from the Kafka header and store it in a flow file attribute called schema.name

- Use the schema name to look up the schema in the Hortonworks Schema Registry (HWX SR)

- Use the schema from HWX SR to convert the events into ProcessRecords

- Route events by event source (speed or geo) using SQL

- Filter, Enrich, Transform

- Deliver the data to downstream syndication topics
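The first four steps above can be sketched outside NiFi as follows. This is an illustration under stated assumptions, not how the NiFi processors are implemented: a plain dictionary stands in for the schema registry, the schema names, field names, and event source values (`geo`, `speed`) are hypothetical, and Python's `sqlite3` module stands in for the SQL engine that NiFi uses for routing.

```python
import csv
import io
import sqlite3

# Stand-in for the schema registry: schema name -> ordered field names.
# Both the schema names and the fields are hypothetical.
SCHEMA_REGISTRY = {
    "truck_geo_events": ["eventTime", "eventSource", "truckId", "latitude", "longitude"],
    "truck_speed_events": ["eventTime", "eventSource", "truckId", "speed"],
}


def to_record(value, headers):
    """Turn one CSV event into a dict using the schema named in its headers."""
    # Step 1: copy the headers into an attribute map (includes schema.name).
    attributes = {key: raw.decode("utf-8") for key, raw in headers}
    # Step 2: look up the schema by name.
    fields = SCHEMA_REGISTRY[attributes["schema.name"]]
    # Step 3: parse the CSV line and pair each value with its field name.
    row = next(csv.reader(io.StringIO(value.decode("utf-8"))))
    if len(row) != len(fields):
        raise ValueError("event does not match schema " + attributes["schema.name"])
    return dict(zip(fields, row))


def route_by_source(records, source):
    """Step 4: select the records of one event source with a SQL query."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE flowfile (idx INTEGER, eventSource TEXT)")
    conn.executemany(
        "INSERT INTO flowfile VALUES (?, ?)",
        [(i, rec["eventSource"]) for i, rec in enumerate(records)],
    )
    rows = conn.execute(
        "SELECT idx FROM flowfile WHERE eventSource = ? ORDER BY idx", (source,)
    ).fetchall()
    conn.close()
    return [records[idx] for (idx,) in rows]


# Two hypothetical raw events, each a (value, headers) pair as read from Kafka.
events = [
    (b"2018-01-01 10:00:00,geo,42,38.44,-90.31", [("schema.name", b"truck_geo_events")]),
    (b"2018-01-01 10:00:01,speed,42,65", [("schema.name", b"truck_speed_events")]),
]
records = [to_record(value, headers) for value, headers in events]
geo_records = route_by_source(records, "geo")
speed_records = route_by_source(records, "speed")
```

In this sketch all parsed values remain strings; a real schema would also carry field types, which is what lets the later filter, enrich, and transform steps work on typed records.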

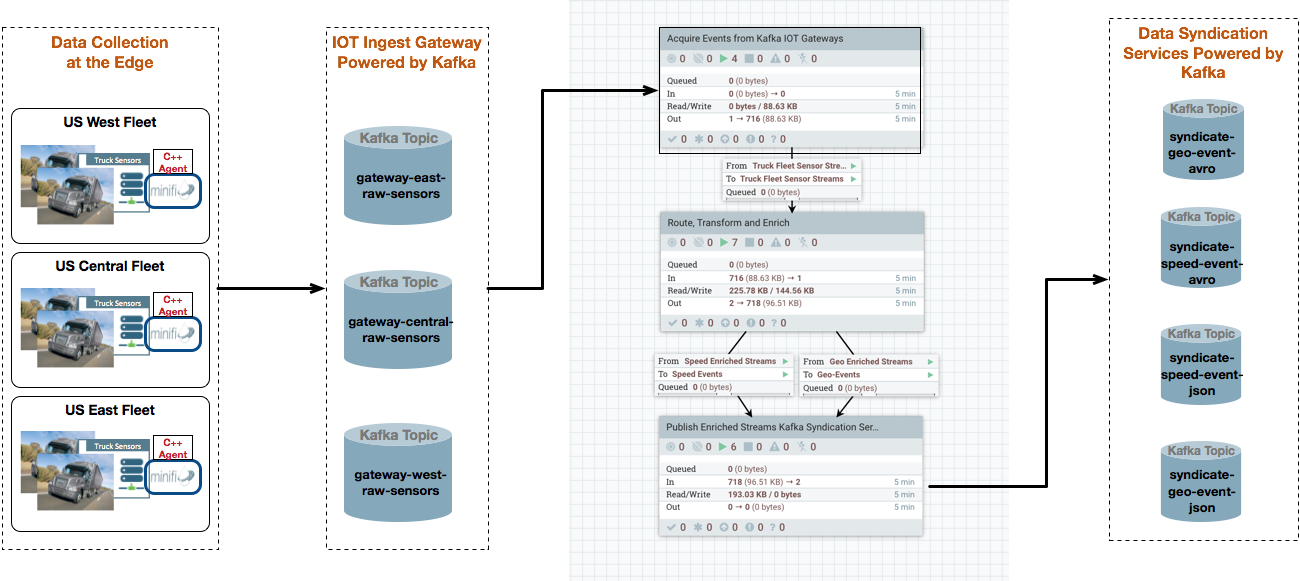

The diagram below illustrates the entire flow, and the subsequent sections walk through how to set up this data flow.