The HDFS repository contains access policies for the Hadoop cluster's HDFS. The Security Agent integrates with the NameNode service on the NameNode host. The agent enforces the policies configured in the HDP Security Administration Web UI and sends HDFS audit information to the portal, where it can be viewed and reported on from a central location.

Warning: In Ambari-managed environments, additional configuration is required. Carefully follow the steps outlined in Configure Hadoop Agent to run in Ambari Environments.

Add HDFS repositories after the Hadoop environment is fully operational. During the initial setup of the repository, Hortonworks recommends testing the connection from the HDP Security Administration Web UI to the NameNode to ensure that the agent can connect to the server after installation is complete.

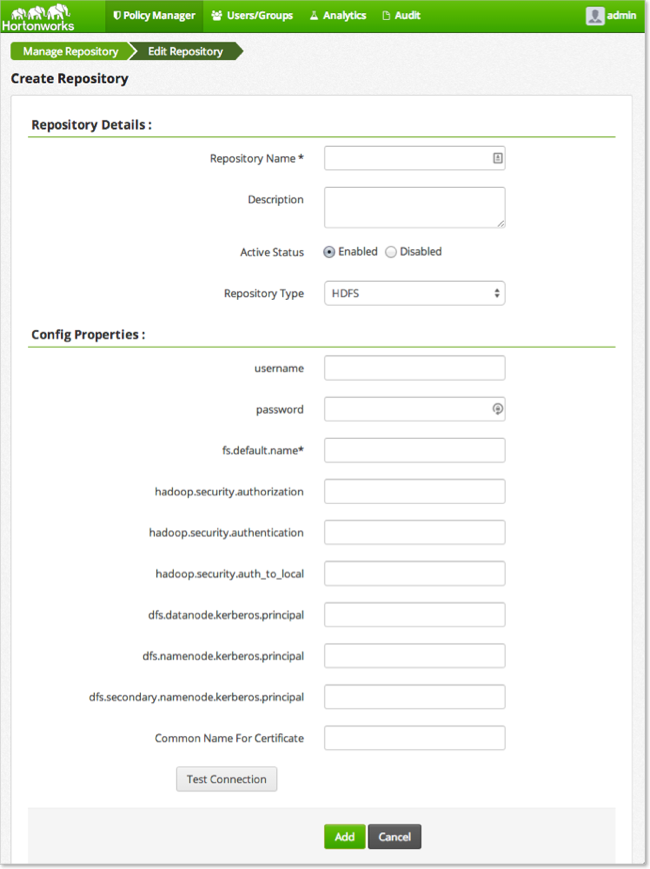

Before installing the agent on the NameNode, create an HDFS repository as follows:

Sign in to the HDP Security Administration Web UI and click .

Next to HDFS, click the + (plus symbol).

The Create Repository page displays.

Complete the Repository Details:

Table 4.1. Policy Manager Repository Details

| Label | Value | Description |
|---|---|---|
| Repository Name | $name | Specify a unique name for the repository. You must specify the same repository name in the agent installation properties. For example, clustername_hdfs. |
| Description | $description-of-repo | Enter a description up to 150 characters. |
| Active Status | Enabled or Disabled | Enable or disable policy enforcement for the repository. |
| Repository type | HDFS, Hive, or HBase | Select the type of repository, HDFS. |
| User name | $user | Specify a user name on the remote system with permission to establish the connection, for example hdfs. |
| Password | $password | Specify the password of the user account for connection. |

Complete the security settings for the Hadoop cluster. The settings must match the values specified in the core-site.xml file, as follows:

Table 4.2. Repository HDFS Required

| Label | Value | Description |
|---|---|---|
| fs.default.name | $hdfs-url | HDFS URL. Must match the setting in the Hadoop core-site.xml file. For example, hdfs://sandbox.hortonworks.com:8020 |
| hadoop.security.authorization | true or false | Specify the same setting found in the core-site.xml file. |
| hadoop.security.authentication | simple or kerberos | Specify the type indicated in the core-site.xml file. |
| hadoop.security.auth_to_local | $usermapping | Must match the setting in the core-site.xml file. For example: `RULE:[2:$1@$0]([rn]m@.*)s/.*/yarn/` `RULE:[2:$1@$0](jhs@.*)s/.*/mapred/` `RULE:[2:$1@$0]([nd]n@.*)s/.*/hdfs/` `RULE:[2:$1@$0](hm@.*)s/.*/hbase/` `RULE:[2:$1@$0](rs@.*)s/.*/hbase/` `DEFAULT` |
| dfs.datanode.kerberos.principal | $dn-principal | Specify the Kerberos DataNode principal name. |
| dfs.namenode.kerberos.principal | $nn-principal | Specify the Kerberos NameNode principal name. |
| dfs.secondary.namenode.kerberos.principal | $secondary-nn-principal | Specify the Kerberos Secondary NN principal name. |
| Common Name For Certificate | $cert-name | Specify the name of the certificate. |

Click .

If the server can connect to HDFS, the connection successful message displays. If the connection fails, go to the troubleshooting appendix.

After making a successful connection, click .
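The values entered for Table 4.2 must mirror the cluster's own configuration. As a minimal sketch, assuming the `hdfs` client is installed on a cluster node and reads the same configuration files as the NameNode, the following function prints the current value of each property so it can be copied into the Create Repository page. The function name `print_repo_settings` is hypothetical; `hdfs getconf -confKey` is the standard Hadoop 2 configuration lookup.

```shell
#!/bin/sh
# Sketch: print the cluster-side values that the repository form asks for.
# Assumption: the `hdfs` client is installed and configured on this host.
print_repo_settings() {
    for key in fs.default.name \
               hadoop.security.authorization \
               hadoop.security.authentication \
               hadoop.security.auth_to_local \
               dfs.datanode.kerberos.principal \
               dfs.namenode.kerberos.principal \
               dfs.secondary.namenode.kerberos.principal; do
        # getconf exits non-zero when a key is not defined; report that
        # instead of aborting so the remaining keys are still printed.
        if value=$(hdfs getconf -confKey "$key" 2>/dev/null); then
            echo "${key}=${value}"
        else
            echo "${key}=(not set)"
        fi
    done
}
```

After sourcing the function, run `print_repo_settings` on the NameNode host. On a cluster without Kerberos, the principal properties are likely to be reported as not set.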

Install the agent on the NameNode host as root (or with sudo privileges). In HA Hadoop clusters, you must also install an agent on the Secondary NN.

Perform the following steps on the Hadoop NameNode host.

Log on to the host as root.

Create a temporary directory, such as /tmp/xasecure:

mkdir /tmp/xasecure

Move the package into the temporary directory along with the MySQL Connector Jar.

Extract the contents:

tar xvf $xasecureinstallation.tar

Go to the directory where you extracted the installation files:

cd /tmp/xasecure/xasecure-$name-$build-version

Open the install.properties file for editing.

Change the following parameters for your environment:

Table 4.3. HDFS Agent Install Parameters

| Parameter | Value | Description |
|---|---|---|
| POLICY_MGR_URL | $url | Specify the full URL to access the Policy Manager Web UI. For example, http://pm-host:6080. |
| MYSQL_CONNECTOR_JAR | $path-to-mysql-connector | Absolute path on the local host to the JDBC driver for MySQL, including the file name. For example, /tmp/xasecure/ |
| REPOSITORY_NAME | $Policy-Manager-Repo-Name | Name of the HDFS Repository in the Policy Manager that this agent connects to after installation. |
| XAAUDIT.DB.HOSTNAME | $XAsecure-db-host | Specify the host name of the MySQL database. |
| XAAUDIT.DB.DATABASE_NAME | $auditdb | Specify the audit database name that matches the audit_db_name specified during the web application server installation. |
| XAAUDIT.DB.USER_NAME | $auditdbuser | Specify the audit database user name that matches the audit_db_user specified during the web application server installation. |
| XAAUDIT.DB.PASSWORD | $auditdbupw | Specify the audit database password that matches the audit_db_password specified during the web application server installation. |

Save the install.properties file.

Note: If your environment is configured to use SSL, modify the properties following the instructions in Set Up SSL for HDFS Security Agent.

The following is an example of the Hadoop Agent install.properties file with the MySQL database co-located on the XASecure host:

    #
    # Location of Policy Manager URL
    #
    #
    # Example:
    # POLICY_MGR_URL=http://policymanager.xasecure.net:6080
    #
    POLICY_MGR_URL=http://xasecure-host:6080
    #
    # Location of mysql client library (please check the location of the jar file)
    #
    MYSQL_CONNECTOR_JAR=/usr/share/java/mysql-connector-java.jar
    #
    # This is the repository name created within policy manager
    #
    # Example:
    # REPOSITORY_NAME=hadoopdev
    #
    REPOSITORY_NAME=sandbox
    #
    # AUDIT DB Configuration
    #
    # This information should match with the one you specified during the PolicyManager Installation
    #
    # Example:
    # XAAUDIT.DB.HOSTNAME=localhost
    # XAAUDIT.DB.DATABASE_NAME=xasecure
    # XAAUDIT.DB.USER_NAME=xalogger
    # XAAUDIT.DB.PASSWORD=
    #
    #
    XAAUDIT.DB.HOSTNAME=xasecure-host
    XAAUDIT.DB.DATABASE_NAME=xaaudit
    XAAUDIT.DB.USER_NAME=xaaudit
    XAAUDIT.DB.PASSWORD=password
    #
    # SSL Client Certificate Information
    #
    # Example:
    # SSL_KEYSTORE_FILE_PATH=/etc/xasecure/conf/xasecure-hadoop-client.jks
    # SSL_KEYSTORE_PASSWORD=clientdb01
    # SSL_TRUSTSTORE_FILE_PATH=/etc/xasecure/conf/xasecure-truststore.jks
    # SSL_TRUSTSTORE_PASSWORD=changeit
    #
    #
    # IF YOU DO NOT DEFINE SSL parameters, the installation script will automatically generate necessary key(s) and assign appropriate values
    # ONLY If you want to assign manually, please uncomment the following variables and assign appropriate values.
    # SSL_KEYSTORE_FILE_PATH=
    # SSL_KEYSTORE_PASSWORD=
    # SSL_TRUSTSTORE_FILE_PATH=
    # SSL_TRUSTSTORE_PASSWORD=
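Before running the installer, it can help to confirm that every parameter from Table 4.3 has a value. The following is a minimal sketch, not part of the product: the function name `check_install_properties` is hypothetical, and the check only confirms that each key has a non-empty, uncommented assignment. It does not validate the values themselves.

```shell
#!/bin/sh
# Sketch: list required install.properties keys that are missing or empty.
# The key list comes from Table 4.3; the function name is illustrative.
check_install_properties() {
    props_file="$1"
    status=0
    for key in POLICY_MGR_URL MYSQL_CONNECTOR_JAR REPOSITORY_NAME \
               XAAUDIT.DB.HOSTNAME XAAUDIT.DB.DATABASE_NAME \
               XAAUDIT.DB.USER_NAME XAAUDIT.DB.PASSWORD; do
        # Take the text after the first "=" on the first uncommented line
        # whose name matches exactly; lines starting with "#" never match.
        value=$(awk -F= -v k="$key" \
            '$1 == k { sub(/^[^=]*=/, ""); print; exit }' "$props_file")
        if [ -z "$value" ]; then
            echo "missing or empty: ${key}"
            status=1
        fi
    done
    return "$status"
}
```

For example, `check_install_properties /tmp/xasecure/xasecure-$name-$build-version/install.properties` prints one line per unset key and returns a non-zero status if any are found.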

After configuring the install.properties file, install the agent as root:

Log on to the Linux system as root and go to the directory where you extracted the installation files:

cd /tmp/xasecure/xasecure-$name-$build-version

Run the agent installation script:

./install.sh

Connected Agents display in the HDP Security Administration Web UI.

Note: Agents may not appear in the list until after the first event occurs in the repository.

To verify that the agent is connected to the server:

Log in to the interface using the admin account.

Click > .

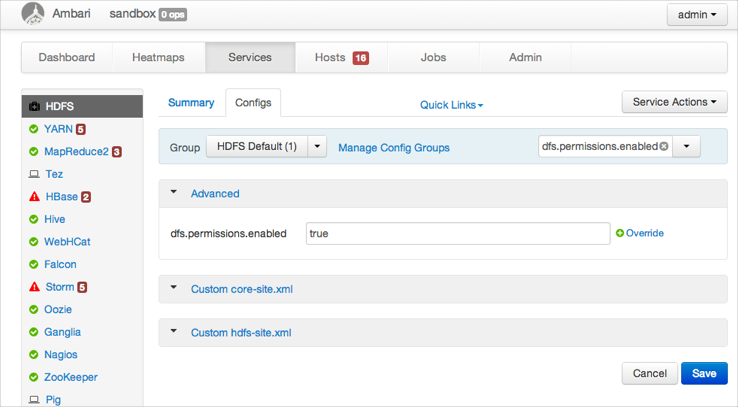

On Hadoop clusters managed by Ambari, change the default HDFS settings to allow the agent to enforce policies and report auditing events. Additionally, Ambari uses its own startup scripts to start and stop the NameNode server. Therefore, modify the Hadoop configuration script to include the Security Agent with a NameNode restart.

To configure HDFS properties and NameNode startup scripts:

Update HDFS properties from the Ambari Web Interface as follows:

On the Dashboard, click .

The HDFS Service page displays.

Go to the tab.

In Filter, type

dfs.permissions.enabledand press enter.The results display. This property is located under Advanced.

Expand Advanced, then change

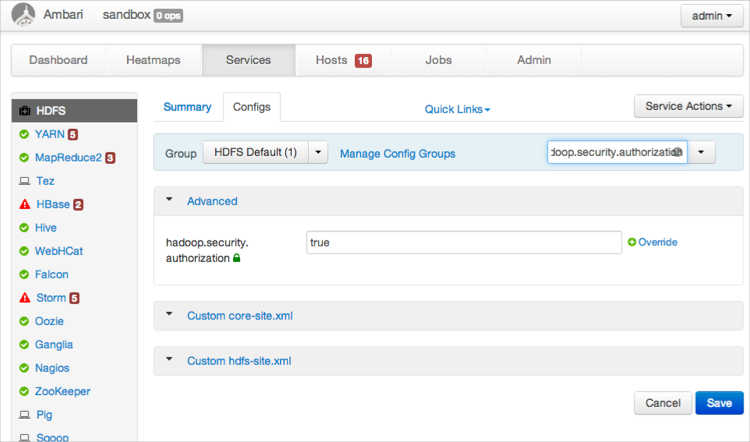

dfs.permissions.enabledtotrue.In Filter, type

hadoop.security.authorizationand press enter.Under the already expanded Advanced option, the parameter displays.

Change

hadoop.security.authorizationtotrue.Scroll to the bottom of the page and click .

At the top of the page, a message displays indicating the services that need to be restarted.

Warning: Do not restart the services until after you perform the next step.

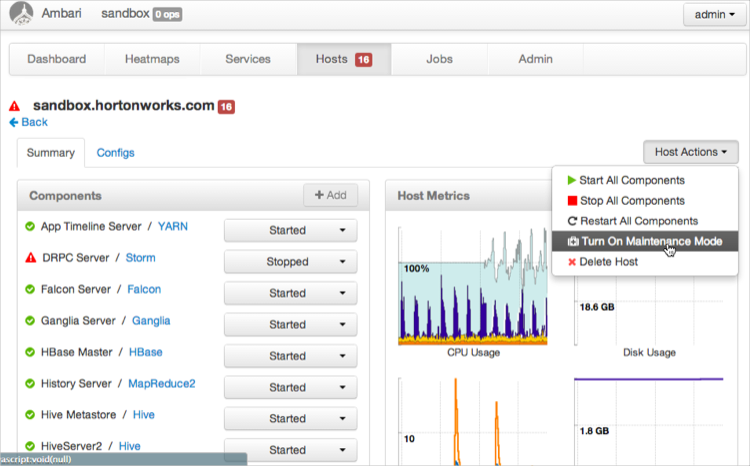

Change the Hadoop configuration script to start the Security Agent with the NameNode service:

In the Ambari Administrator Portal, click and then .

The NameNode Hosts page displays.

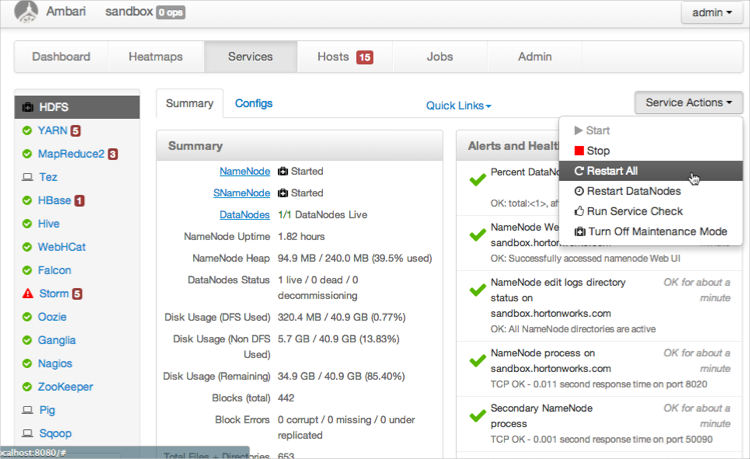

Click and choose .

Wait for the cluster to enter maintenance mode.

SSH to the NameNode as the root user.

Open the hadoop-config.sh script for editing and go to the end of the file. For example:

vi /usr/lib/hadoop/libexec/hadoop-config.sh

At the end of the file, paste the following statement:

    if [ -f ${HADOOP_CONF_DIR}/xasecure-hadoop-env.sh ]
    then
        . ${HADOOP_CONF_DIR}/xasecure-hadoop-env.sh
    fi

This adds the Security Agent for Hadoop to the start script for Hadoop.

Save the changes.

In the Ambari Administrative Portal, click > > .

Wait for the services to completely restart.

Click > > .

It may take several minutes for the process to complete. After confirming that all the services restart as expected, perform a few simple HDFS commands, such as browsing the file system from Hue.
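The hadoop-config.sh edit in the procedure above can also be scripted so that repeating it does not add the statement twice. This is a minimal sketch under stated assumptions: the function name `append_agent_hook` and the `.bak` backup suffix are hypothetical, and the appended statement is the one given in the procedure.

```shell
#!/bin/sh
# Sketch: append the Security Agent hook to hadoop-config.sh exactly once.
# Assumption: the caller passes the path to the script and can write to it.
append_agent_hook() {
    config_script="$1"
    # Skip the edit if an earlier run already added the hook.
    if grep -q 'xasecure-hadoop-env.sh' "$config_script"; then
        echo "hook already present in ${config_script}"
        return 0
    fi
    # Keep a copy of the unmodified script (the .bak suffix is illustrative).
    cp -p "$config_script" "${config_script}.bak"
    # The quoted delimiter keeps ${HADOOP_CONF_DIR} literal in the output.
    cat >> "$config_script" <<'EOF'

if [ -f ${HADOOP_CONF_DIR}/xasecure-hadoop-env.sh ]
then
    . ${HADOOP_CONF_DIR}/xasecure-hadoop-env.sh
fi
EOF
    echo "hook appended to ${config_script}"
}
```

For the path used in the procedure, the call would be `append_agent_hook /usr/lib/hadoop/libexec/hadoop-config.sh`, run as root while the NameNode is in maintenance mode.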

The HDFS Agent is integrated with the NameNode Service. Before your changes can take effect you must restart the NameNode service.

On the NameNode host machine, execute the following command:

su -l hdfs -c "/usr/lib/hadoop/sbin/hadoop-daemon.sh stop namenode"

Ensure that the NameNode Service stops completely.

On the NameNode host machine, execute the following command:

su -l hdfs -c "/usr/lib/hadoop/sbin/hadoop-daemon.sh start namenode"

Ensure that the NameNode Service starts correctly.
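One way to confirm that the NameNode has stopped completely before starting it again is to poll its process ID. The following is a minimal sketch, not a product feature: the function name `wait_for_exit` and the default 60-second timeout are assumptions, and finding the NameNode PID (for example with ps or from a pid file) is left to your environment. Run it as root or as the process owner, because `kill -0` only tests for a process that the caller is permitted to signal.

```shell
#!/bin/sh
# Sketch: wait until a process has exited, or give up after a timeout.
# Usage: wait_for_exit PID [TIMEOUT_SECONDS]
wait_for_exit() {
    pid="$1"
    timeout="${2:-60}"
    elapsed=0
    # kill -0 sends no signal; it only reports whether the PID can be signalled.
    while kill -0 "$pid" 2>/dev/null; do
        if [ "$elapsed" -ge "$timeout" ]; then
            echo "process ${pid} still running after ${timeout}s"
            return 1
        fi
        sleep 1
        elapsed=$((elapsed + 1))
    done
    echo "process ${pid} has exited"
}
```

A non-zero return means the process was still present when the timeout expired, in which case the start command should not be issued yet.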

After completing the setup of the HDFS Repository and agent, perform a few simple tests to ensure that the agent is auditing and reporting events to the HDP Security Administration Web UI. By default, the repository allows all access and has auditing enabled.

Log in to the Hadoop cluster.

Type the following command to display a list of items at the root folder of HDFS:

    hadoop fs -ls /
    Found 6 items
    drwxrwxrwx   - yarn   hadoop          0 2014-04-21 07:21 /app-logs
    drwxr-xr-x   - hdfs   hdfs            0 2014-04-21 07:23 /apps
    drwxr-xr-x   - mapred hdfs            0 2014-04-21 07:16 /mapred
    drwxr-xr-x   - hdfs   hdfs            0 2014-04-21 07:16 /mr-history
    drwxrwxrwx   - hdfs   hdfs            0 2014-06-17 15:05 /tmp
    drwxr-xr-x   - hdfs   hdfs            0 2014-04-22 07:21 /user

Sign in to the Web UI and click .

The Big Data page displays a list of events for the configured Repositories.

Click > > HDFS.

The list filters as you make selections.