Using an external database for cluster services

Cloudbreak allows you to register an existing RDBMS instance as an external database for certain cluster services. After you register the RDBMS with Cloudbreak, you can use it for multiple clusters.

Supported databases

If you would like to use an external database for one of the components that support it, you may use the database types and versions defined in the Support Matrix.

| Component | Embedded DB available? | How to set up your own DB |
|---|---|---|
| Ambari | By default, Ambari uses an embedded PostgreSQL instance. | Refer to Using Existing Databases - Ambari or to documentation for the specific version that you would like to use. |
| Druid | You must provide an external database. | Refer to Configuring Druid and Superset Metadata Stores in MySQL or to documentation for the specific version that you would like to use. |
| Hive | By default, Cloudbreak installs a PostgreSQL instance on the Hive Metastore host. | Refer to Using Existing Databases - Hive or to documentation for the specific version that you would like to use. |
| Oozie | You must provide an external database. | Refer to Using Existing Databases - Oozie or to documentation for the specific version that you would like to use. |
| Ranger | You must provide an external database. | Refer to Using Existing Databases - Ranger or to documentation for the specific version that you would like to use. |
| Superset | You must provide an external database. | Refer to Configuring Druid and Superset Metadata Stores in MySQL or to documentation for the specific version that you would like to use. |
| Other | Refer to the component-specific documentation. | Refer to the component-specific documentation. |

External database options

Cloudbreak includes the following external database options:

| Option | Description | Blueprint requirements | Steps | Example |
|---|---|---|---|---|
| Built-in types | Cloudbreak includes a few built-in types: Hive, Druid, Ranger, Superset, and Oozie. | Use a standard blueprint that does not include any JDBC parameters. Cloudbreak automatically injects the JDBC property variables into the blueprint. | Register the database in the UI. After that, you can attach the database config to your clusters. | Refer to Example 1 |
| Other types | In addition to the built-in types, Cloudbreak allows you to specify custom types. In the UI, this corresponds to the option "Other" > "Enter the type". | You must provide a custom dynamic blueprint that includes RDBMS-specific variables. Refer to Creating a template blueprint. | Prepare your custom blueprint first. Next, register the database in the UI. After that, you can attach the database config to your clusters. | Refer to Example 2 |

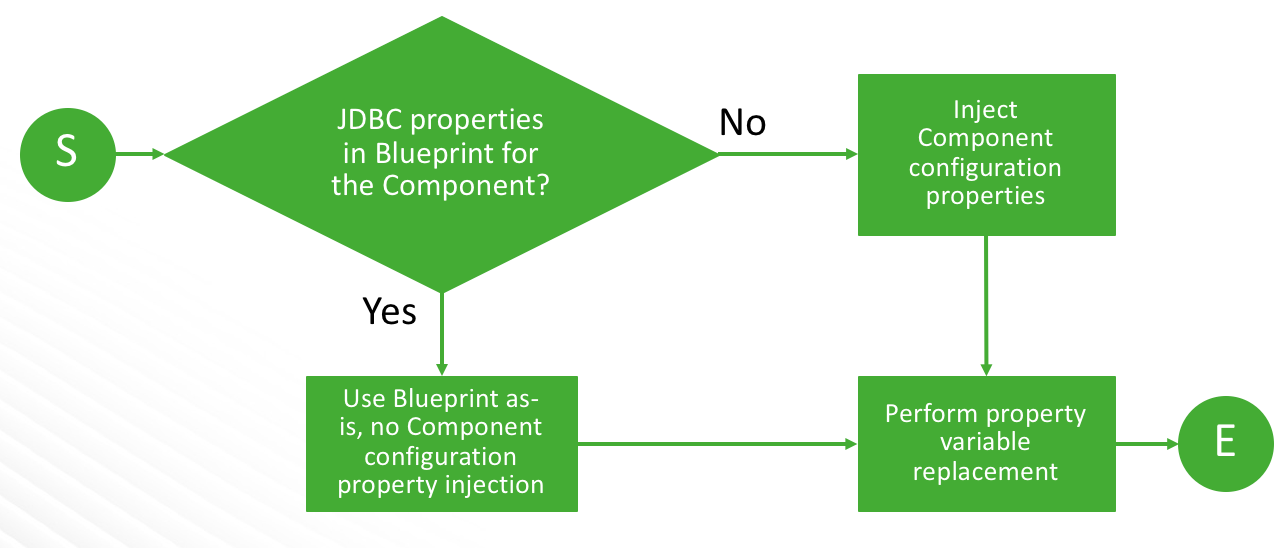

During cluster create, Cloudbreak checks whether the JDBC properties are present in the blueprint:

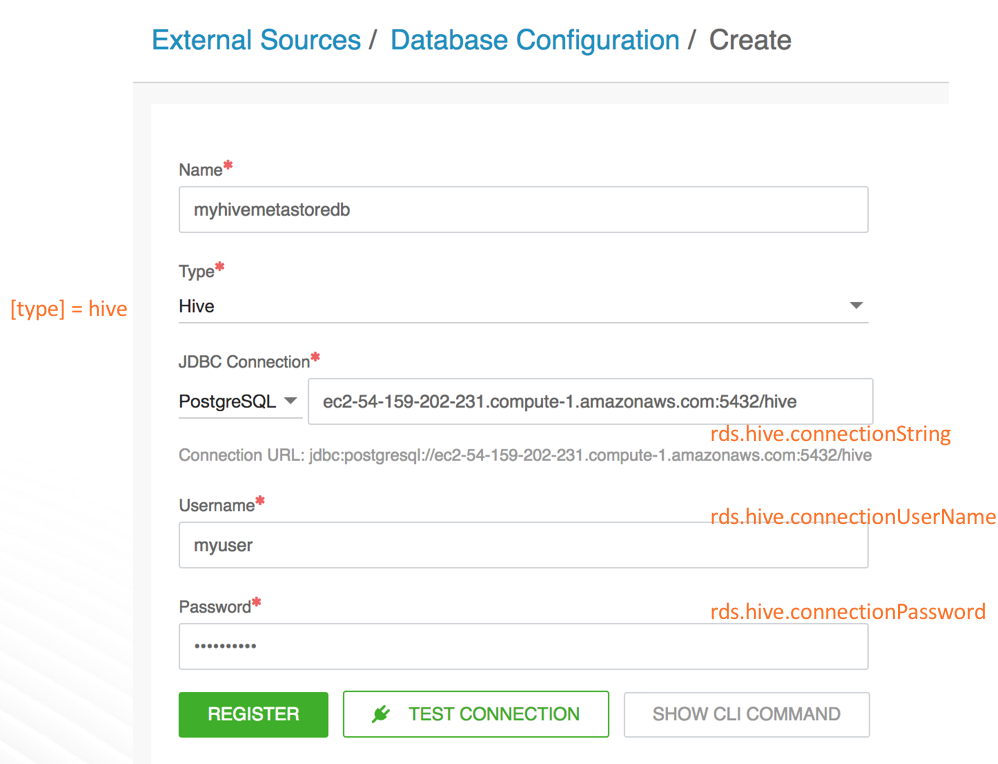

Example 1: Built-in type Hive

In this scenario, you start up with a standard blueprint, and Cloudbreak injects the JDBC properties into the blueprint.

- Register an existing external database of "Hive" type (built-in type):

    | Property variable | Example value |
    |---|---|
    | rds.hive.connectionString | jdbc:postgresql://ec2-54-159-202-231.compute-1.amazonaws.com:5432/hive |
    | rds.hive.connectionDriver | org.postgresql.Driver |
    | rds.hive.connectionUserName | myuser |
    | rds.hive.connectionPassword | Hadoop123! |
    | rds.hive.fancyName | PostgreSQL |
    | rds.hive.databaseType | postgres |

- Create a cluster by using a standard blueprint (i.e. one without JDBC related variables) and by attaching the external Hive database configuration.

- Upon cluster create, Hive JDBC properties will be injected into the blueprint according to the following template:

        ...
        "hive-site": {
          "properties": {
            "javax.jdo.option.ConnectionURL": "{{{ rds.hive.connectionString }}}",
            "javax.jdo.option.ConnectionDriverName": "{{{ rds.hive.connectionDriver }}}",
            "javax.jdo.option.ConnectionUserName": "{{{ rds.hive.connectionUserName }}}",
            "javax.jdo.option.ConnectionPassword": "{{{ rds.hive.connectionPassword }}}"
          }
        },
        "hive-env" : {
          "properties" : {
            "hive_database" : "Existing {{{ rds.hive.fancyName}}} Database",
            "hive_database_type" : "{{{ rds.hive.databaseType }}}"
          }
        }
        ...

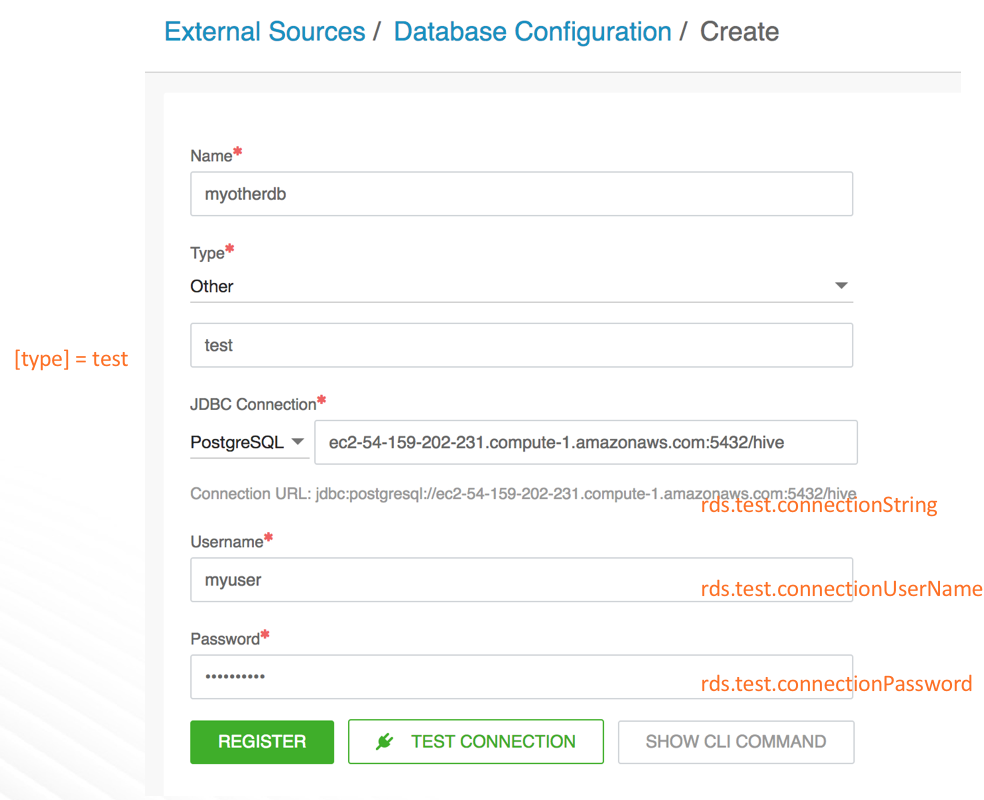
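To make the substitution in Example 1 concrete, the following Python sketch fills the template with the example values from the registration step. It is an illustration only, not Cloudbreak's implementation: the `render` helper and the `VARIABLE` pattern are hypothetical names, and the regular expression covers only the simple triple-brace variables used in this example rather than full mustache syntax.

```python
import json
import re

# Example values from the registration step in Example 1.
RDS_HIVE = {
    "rds.hive.connectionString": "jdbc:postgresql://ec2-54-159-202-231.compute-1.amazonaws.com:5432/hive",
    "rds.hive.connectionDriver": "org.postgresql.Driver",
    "rds.hive.connectionUserName": "myuser",
    "rds.hive.connectionPassword": "Hadoop123!",
    "rds.hive.fancyName": "PostgreSQL",
    "rds.hive.databaseType": "postgres",
}

# The injection template from Example 1, written as a Python dict.
HIVE_TEMPLATE = {
    "hive-site": {
        "properties": {
            "javax.jdo.option.ConnectionURL": "{{{ rds.hive.connectionString }}}",
            "javax.jdo.option.ConnectionDriverName": "{{{ rds.hive.connectionDriver }}}",
            "javax.jdo.option.ConnectionUserName": "{{{ rds.hive.connectionUserName }}}",
            "javax.jdo.option.ConnectionPassword": "{{{ rds.hive.connectionPassword }}}",
        }
    },
    "hive-env": {
        "properties": {
            "hive_database": "Existing {{{ rds.hive.fancyName}}} Database",
            "hive_database_type": "{{{ rds.hive.databaseType }}}",
        }
    },
}

# Hypothetical pattern: matches {{{ name }}} with optional inner whitespace.
VARIABLE = re.compile(r"\{\{\{\s*([\w.]+)\s*\}\}\}")


def render(value, variables):
    """Recursively replace triple-brace variables in all string values."""
    if isinstance(value, dict):
        return {key: render(item, variables) for key, item in value.items()}
    if isinstance(value, str):
        # A variable missing from `variables` raises KeyError on purpose.
        return VARIABLE.sub(lambda m: variables[m.group(1)], value)
    return value


rendered = render(HIVE_TEMPLATE, RDS_HIVE)
print(json.dumps(rendered, indent=2))
```

With these inputs, the `hive_database` property becomes "Existing PostgreSQL Database" and the connection URL becomes the full JDBC string registered for the database.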

Example 2: Other type

In this scenario, you start up with a special blueprint including JDBC property variables, and Cloudbreak replaces JDBC-related property variables in the blueprint.

- Prepare a blueprint that includes property variables. Use mustache template syntax. For example:

        ...
        "test-site": {
          "properties": {
            "javax.jdo.option.ConnectionURL":"{{rds.test.connectionString}}"
          }
        ...

- Register an existing external database of some "Other" type. For example:

    | Property variable | Example value |
    |---|---|
    | rds.hive.connectionString | ec2-54-159-202-231.compute-1.amazonaws.com:5432/hive |
    | rds.hive.connectionDriver | org.postgresql.Driver |
    | rds.hive.connectionUserName | myuser |
    | rds.hive.connectionPassword | Hadoop123! |
    | rds.hive.subprotocol | postgres |
    | rds.hive.databaseEngine | POSTGRES |

- Create a cluster by using your custom blueprint and by attaching the external database configuration.

- Upon cluster create, Cloudbreak replaces JDBC-related property variables in the blueprint.
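Before creating a cluster from a custom blueprint, it can help to list the property variables the blueprint references and confirm that each one has a value. The Python sketch below is one possible pre-check, not part of Cloudbreak: `referenced_variables`, `missing_variables`, and the sample value are hypothetical, and the pattern recognizes only plain `{{name}}` and `{{{name}}}` variables.

```python
import re

# Hypothetical pattern: matches {{name}} and {{{name}}}, with optional whitespace.
MUSTACHE_VAR = re.compile(r"\{\{\{?\s*([\w.]+)\s*\}?\}\}")


def referenced_variables(blueprint_text):
    """Return the set of variable names used in the blueprint text."""
    return set(MUSTACHE_VAR.findall(blueprint_text))


def missing_variables(blueprint_text, provided):
    """Return the referenced variables that have no provided value."""
    return referenced_variables(blueprint_text) - set(provided)


# A completed version of the fragment from Example 2 (closing braces added here).
blueprint_fragment = """
"test-site": {
  "properties": {
    "javax.jdo.option.ConnectionURL": "{{rds.test.connectionString}}"
  }
}
"""

# Assumed value for illustration only.
provided = {
    "rds.test.connectionString": "ec2-54-159-202-231.compute-1.amazonaws.com:5432/hive",
}

print(sorted(referenced_variables(blueprint_fragment)))
print(sorted(missing_variables(blueprint_fragment, provided)))
```

An empty result from `missing_variables` means every variable in the blueprint has a matching value; any name it returns would be left unreplaced.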

Related links

Mustache template syntax

Creating a template blueprint for RDBMS

In order to use an external RDBMS for some component other than the built-in components, you must include JDBC property variables in your blueprint. You must use mustache template syntax. See Example 2: Other type for an example configuration.

Related links

Creating a template blueprint

Mustache template syntax (External)

Register an external database

You must create the external RDBMS instance and database prior to registering it with Cloudbreak. Once you have it ready, you can:

- Register it in Cloudbreak web UI or CLI.

- Use it with one or more clusters. Once registered, the database appears in the list of available databases under the advanced External Sources > Configure Databases option when you create a cluster.

Prerequisites

If you are planning to use an external MySQL or Oracle database, you must download the JDBC connector's JAR file and place it in a location available to the cluster host on which Ambari is installed. The steps below require that you provide the URL to the JDBC connector's JAR file.

If you are using your own custom image, you may place the JDBC connector's JAR file directly on the machine as part of the image burning process.

Steps

- From the navigation pane, select External Sources > Database Configurations.

- Select Register Database Configuration.

- Provide the following information:

    | Parameter | Description |
    |---|---|
    | Name | Enter the name to use when registering this database with Cloudbreak. This is not the database name. |
    | Type | Select the service for which you would like to use the external database. If you selected "Other", you must provide a special blueprint. |
    | JDBC Connection | Select the database type and enter the JDBC connection string (HOST:PORT/DB_NAME). |
    | Connector's JAR URL | (MySQL and Oracle only) Provide a URL to the JDBC connector's JAR file. The JAR file must be hosted in a location accessible to the cluster host on which Ambari is installed. At cluster creation time, Cloudbreak places the JAR file in the /opts/jdbc-drivers directory. You do not need to provide the "Connector's JAR URL" if you are using a custom image and the JAR file was either manually placed on the VM as part of custom image burning or placed there by using a recipe. |
    | Username | Enter the JDBC connection username. |
    | Password | Enter the JDBC connection password. |

- Click Test Connection to validate and test the RDS connection information.

    Note

    The Test Connection option:

    - Does not work when an external authentication source uses LDAPS with a self-signed certificate.

    - Might not work if the Cloudbreak instance cannot reach the LDAP server instance.

- Once your settings are validated and working, click REGISTER to save the configuration.

- The database will now show up on the list of available databases when creating a cluster under advanced External Sources > Configure Databases. You can select it and click Attach each time you would like to use it for a cluster.
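The JDBC Connection field expects the form HOST:PORT/DB_NAME, while Example 1 shows a full JDBC URL. The Python sketch below checks the short form and builds a full URL from it. It assumes that the selected database type simply determines the URL prefix; `build_jdbc_url`, `JDBC_PREFIX`, and the type keys are hypothetical and do not describe Cloudbreak's internal logic. Oracle is left out because its JDBC URL format differs.

```python
import re

# HOST:PORT/DB_NAME, for example db.example.com:5432/hive
HOST_PORT_DB = re.compile(r"^(?P<host>[A-Za-z0-9.-]+):(?P<port>\d{1,5})/(?P<db>\w+)$")

# Standard JDBC URL prefixes for the PostgreSQL and MySQL drivers.
# The dictionary keys are assumed labels for this sketch.
JDBC_PREFIX = {
    "postgres": "jdbc:postgresql://",
    "mysql": "jdbc:mysql://",
}


def build_jdbc_url(database_type, host_port_db):
    """Validate HOST:PORT/DB_NAME and return a full JDBC URL."""
    match = HOST_PORT_DB.match(host_port_db)
    if match is None:
        raise ValueError("expected HOST:PORT/DB_NAME, got %r" % host_port_db)
    if not 1 <= int(match["port"]) <= 65535:
        raise ValueError("port out of range: %s" % match["port"])
    if database_type not in JDBC_PREFIX:
        raise ValueError("unsupported database type: %r" % database_type)
    return JDBC_PREFIX[database_type] + host_port_db


url = build_jdbc_url(
    "postgres", "ec2-54-159-202-231.compute-1.amazonaws.com:5432/hive"
)
print(url)
```

Running a check like this before you click Test Connection can catch a missing port or database name early.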